An artificial retina would be a tremendous boon to the many people with visual impairments, and the possibility is getting closer to reality every year. One of the latest developments takes a different and promising approach, using tiny points that convert light into electricity, and tests in virtual reality suggest that it could be a workable way forward.

This photovoltaic retinal prosthesis comes from the École polytechnique fédérale de Lausanne (EPFL), where Diego Ghezzi has been working on it for several years.

The first retinal prostheses were made decades ago. The basic idea is as follows: a camera outside the body (e.g. on glasses) sends a signal over a wire to a microelectrode array, whose many tiny electrodes pierce the non-functioning retinal surface and stimulate the working cells directly.

There are two main problems with this. First, powering the array and sending it data requires a cable that runs from outside the eye to the inside, generally a “don’t” when it comes to prosthetics and the body in general. Second, the number of electrodes in the array is limited by the size of the individual electrodes, which means that for many years the effective resolution has been, at best, on the order of a few dozen or a few hundred “pixels.” (The concept of a pixel does not translate directly because of the way the visual system works.)

Ghezzi’s approach avoids both of these problems by using photovoltaic materials, which convert light into electricity. It’s not that different from what happens in a digital camera, except that instead of the charge being recorded as an image, the current is sent into the retina, as it was with the powered electrodes. No cable is needed to carry power or data to the implant, because both are provided by the light shining on it.

Credit: Alain Herzog / EPFL

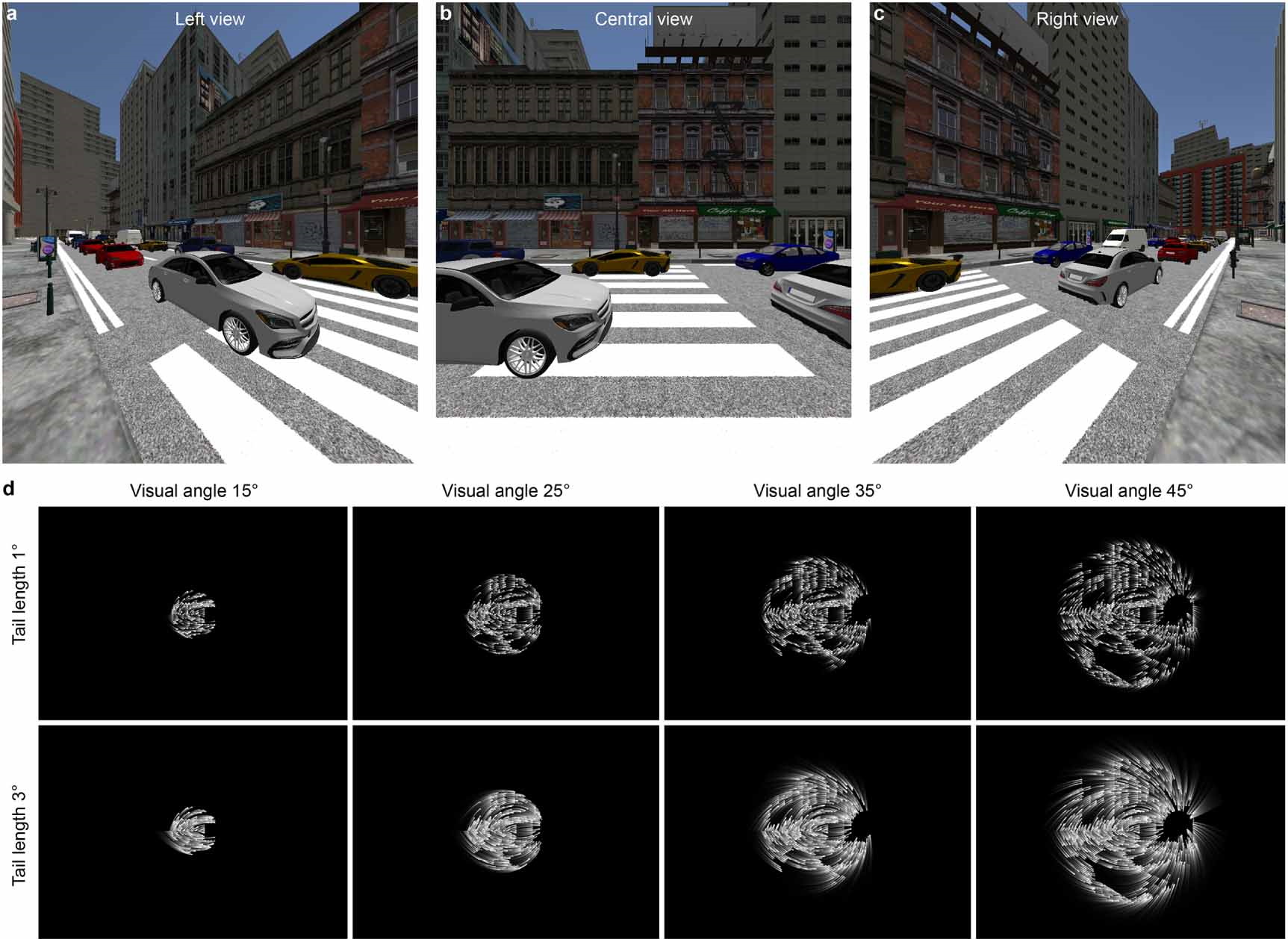

The EPFL prosthesis has thousands of tiny photovoltaic points that would, in theory, be illuminated by a device outside the eye that sends in light according to what a camera detects. Of course, it is still incredibly difficult to engineer. The other part of the setup would be a pair of glasses that captures an image and projects it through the eye onto the implant.

We first heard about this approach back in 2018, and things have changed somewhat since then, as a new paper documents.

“We increased the number of pixels from around 2,300 to 10,500,” Ghezzi explained in an email to .. “Now it’s difficult to see them individually and they look like a continuous film.”

Of course, once these points are pressed directly against the retina, it’s a different story. After all, that’s only about 100 × 100 pixels if they were arranged in a square, not exactly high definition. The idea, however, is not to replicate human vision, which may be an impossible task to begin with and is certainly not realistic for anyone’s first shot.
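As a quick check of the “100 × 100 or so” figure, here is a minimal sketch that converts a pixel count into the side of an equivalent square grid. The function name `square_side` is made up for this illustration, and the square layout is only a convenient comparison, not a description of the implant’s actual geometry.

```python
import math

def square_side(pixel_count):
    """Side length (rounded to a whole number) of a square grid
    holding roughly pixel_count pixels. Hypothetical helper."""
    return round(math.sqrt(pixel_count))

# Pixel counts quoted by Ghezzi: the earlier device and the new one.
for count in (2300, 10500):
    side = square_side(count)
    print(f"{count} pixels is roughly a {side} x {side} grid")
```

By this measure the earlier device was closer to 48 × 48, and the new one is a little over 100 on a side.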

“Technically, it is possible to make pixels smaller and denser,” explained Ghezzi. “The problem is that the generated current decreases with the pixel area.”
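To make that trade-off concrete, the sketch below assumes the simplest possible relationship: the generated current is strictly proportional to pixel area, and the spacing between pixels shrinks along with their diameter. Both are assumptions made for illustration; the article only says that current decreases with area, and the function names are hypothetical.

```python
def relative_current(diameter_scale):
    """Current per pixel relative to the original pixel,
    ASSUMING current is proportional to area (diameter squared)."""
    return diameter_scale ** 2

def relative_density(diameter_scale):
    """Pixels per unit area relative to the original layout,
    ASSUMING spacing scales with pixel diameter."""
    return 1 / diameter_scale ** 2

# Halving the pixel diameter under these assumptions:
scale = 0.5
print(relative_density(scale))  # pixels per unit area, relative to before
print(relative_current(scale))  # current per pixel, relative to before
```

Under these assumptions, halving the diameter fits four times as many pixels into the same area, but each one delivers only a quarter of the current.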


Current decreases with pixel size, and the pixels are not exactly large to begin with. Credit: Ghezzi et al.

The more pixels you add, the harder it is to make the implant work, and there is also a risk (which the team tested) of two neighboring points stimulating the same network in the retina. But with too few, the resulting image may not be intelligible to the user. 10,500 sounds like a lot, and it might be enough, but the simple fact is that there is no data to support that. To start gathering some, the team turned to a seemingly unlikely medium: VR.

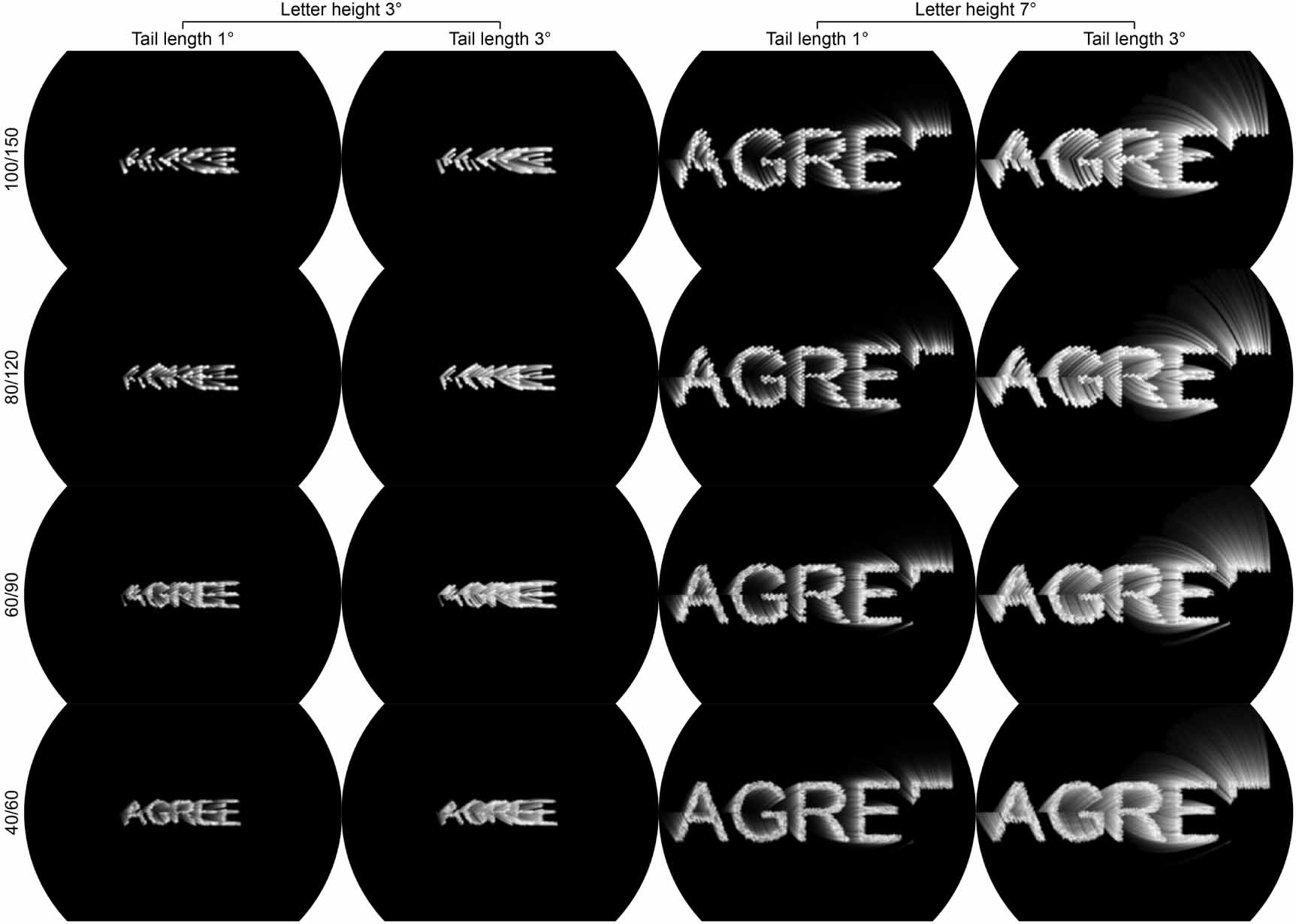

Because the team can’t exactly “test” install an experimental retinal implant in people to see whether it works, they needed another way to tell whether the device’s dimensions and resolution would be sufficient for certain everyday tasks, such as recognizing objects and letters.

Credit: Jacob Thomas Thorn et al

To do this, they put people in VR environments that were dark except for small simulated “phosphenes,” the pinpricks of light that they expect to create by stimulating the retina via the implant. Ghezzi likened what people would see to a constellation of bright, shifting stars. The team varied the number of phosphenes, the area over which they appear and how long each stays lit, its “tail,” when the image shifts, and asked participants how well they could perceive things like a word or a scene.
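The kind of simulation described above can be sketched in a few lines. This is not the team’s software: the square grid clipped to a circle, the nearest-pixel sampling and the exponential fade used for the “tail” are all assumptions chosen to keep the example small, and every function name is hypothetical.

```python
import math

def dot_grid(n_side, radius=1.0):
    """Centers of simulated light points on a square grid,
    keeping only those inside a circular field of the given radius."""
    points = []
    for i in range(n_side):
        for j in range(n_side):
            x = (2 * (i + 0.5) / n_side - 1) * radius
            y = (2 * (j + 0.5) / n_side - 1) * radius
            if math.hypot(x, y) <= radius:
                points.append((x, y))
    return points

def sample(image, points, radius=1.0):
    """Brightness (0..1) of a square grayscale image at each point,
    using the nearest image pixel."""
    size = len(image)
    values = []
    for x, y in points:
        col = min(size - 1, int((x / radius + 1) / 2 * size))
        row = min(size - 1, int((y / radius + 1) / 2 * size))
        values.append(image[row][col])
    return values

def next_frame(previous, target, decay=0.5):
    """One frame of the 'tail': each point shows either the new
    brightness or its fading old brightness, whichever is higher."""
    return [max(t, p * decay) for p, t in zip(previous, target)]

# A tiny scene: bright on the left half, dark on the right.
scene = [[1.0, 1.0, 0.0, 0.0] for _ in range(4)]
points = dot_grid(4)
frame = sample(scene, points)
# The scene goes fully dark; bright points fade instead of vanishing.
faded = next_frame(frame, [0.0] * len(points))
```

Changing `n_side`, `radius` and `decay` corresponds loosely to the three quantities the team varied: the number of points, the area they cover and the length of the tail.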


Their main finding was that the most important factor was the field of view, the overall size of the area in which the image appears. Even a clear image is difficult to understand if it occupies only the very center of your vision, so a wide field of view is preferable even if overall clarity suffers. The brain’s robust visual processing can pick out things like edges and motion even from sparse inputs.

This demonstration showed that the implant’s parameters are theoretically sound, so the team can start working towards human trials. That will not happen quickly, and although this approach shows great promise compared with earlier wired ones, even in the best case it will be several years before it could be made widely available. Still, the prospect of a working retinal implant of this type is exciting, and we will be following it closely.
